Every now and then, someone inquiring about an industrial PC/server from us asks for "two Ethernet ports that can do Teaming". We used to rely on some rather dated experience (and theory) about which Intel NIC chips are required for "teaming", but growing uncertainty led us to re-test our former assumptions.

Indeed, the situation has changed a little. The rules differ between Windows XP (including Server 2003) and Windows 7+. And that applies just to Intel's "proprietary" ANS Teaming, implemented in their drivers. In Windows Server 2012 (but not in Windows 8.1), Microsoft have introduced their own Teaming, independent of Intel. I'll discuss the apparent differences in a later chapter.

The following (fairly incomplete) table records our simple test session. The tests were carried out using two Advantech motherboards, the ASMB-782G4 (Ivy Bridge) and the AIMB-784G2 (Haswell), and an older Intel motherboard (Core 2 Duo, Q35 chipset), plus some add-on NIC boards. The test focused strictly on Intel-proprietary ANS teaming.

| Adapter model | Chip | Bus | XP (driver 18.3) | Win7 (driver 21.1) |
|---|---|---|---|---|
| Intel PRO 1000 GT Desktop | i82541PI | PCI | 2nd | N/A |
| Intel PRO 1000 MT Dual-Port Server | i82546GB | PCI | 1st | 2nd |
| Intel i82566DC | i82566DC | PCI-e/LOM | 1st | Unt. |
| Intel PRO 1000 CT Desktop | i82574L | PCI-e | 1st | 1st |
| Intel Gigabit ET Dual-Port Server | i82576GB | PCI-e | 1st | 1st |
| Intel i82579LM | i82579LM | PCI-e/LOM | 1st | 1st |
| Intel Gigabit I350-T2 Server (dual port) | i350 | PCI-e | 1st | 1st |
| Intel i211 | i211 | PCI-e | Unt. | 1st |
| Intel i217LM | i217LM | PCI-e/LOM | Unt. | 1st |

Glossary:

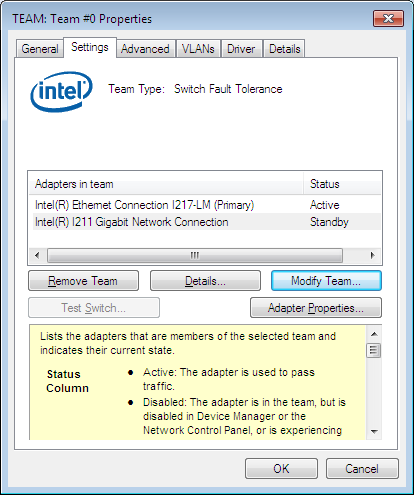

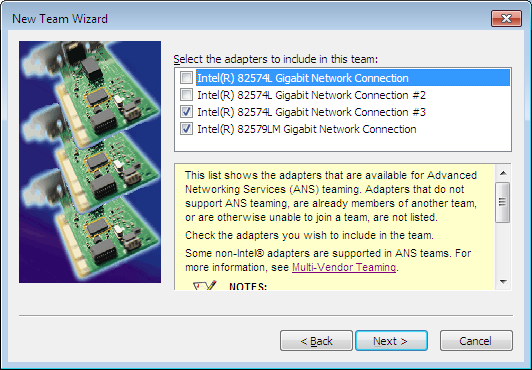

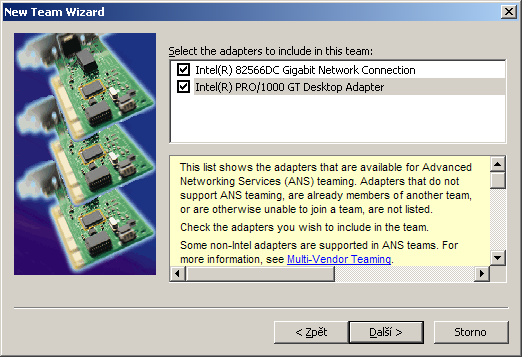

You need at least one "1st-class team member" in the system to be able to configure teams. To find out whether a particular Intel NIC is "1st class", open the Device Manager, find that NIC device and check its "Properties" dialog. If there is a "Teaming" tab, the respective Intel NIC is a 1st-class NIC for the purposes of Teaming, and its Teaming tab gives you access to the teaming functionality.

Apparently, "2nd class" NICs do not have the Teaming tab in their own properties dialog, but do get listed/proposed in the "new team wizard" (once you start it from some 1st-class NIC).

Note that there are also NICs that do have a driver in the system and can work in a stand-alone fashion, but have no Teaming tab and are not proposed as possible members in the "new team wizard". These NICs are unsupported by the Intel driver's Teaming subsystem. They can be low-end Intel NICs or 3rd-party adapters.

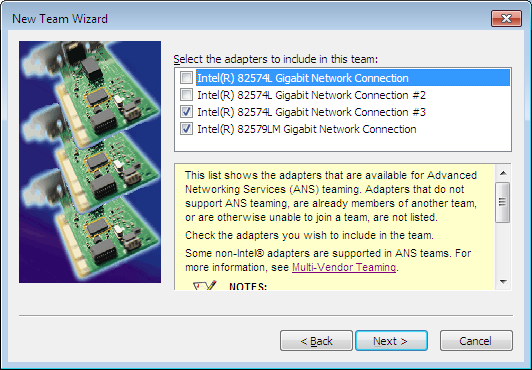

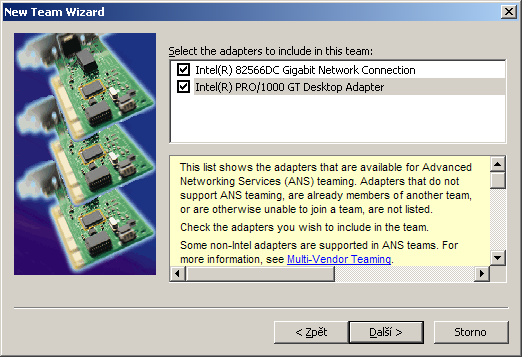

In other words, to distinguish between a 2nd-class teamable NIC and a non-teamable NIC, you need to find a 1st-class teaming NIC and start the "new team wizard" from it, to see whether other NICs in the system get proposed as possible team members.
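The 1st/2nd-class rules above boil down to a simple check. The following is a minimal Python sketch, assuming you have already classified each NIC by hand as described (Teaming tab present, offered only by the wizard, or neither). The function `can_build_team` and the labels `"1st"`, `"2nd"` and `"none"` are hypothetical and not part of any Intel tool.

```python
from typing import Dict

# Hypothetical labels for what we observe in the Windows GUI:
#   "1st"  - the NIC has a Teaming tab in Device Manager -> Properties
#   "2nd"  - no Teaming tab, but it is offered by the "new team wizard"
#   "none" - has a working driver, but is never offered by the wizard
ELIGIBLE = {"1st", "2nd"}


def can_build_team(members: Dict[str, str]) -> bool:
    """Return True if the proposed members could form an Intel ANS team.

    At least one member must be 1st class (the wizard can only be started
    from a Teaming tab), and every member must be 1st or 2nd class.
    """
    classes = set(members.values())
    return "1st" in classes and classes <= ELIGIBLE
```

For example, using our Win7 results, an i82574L (1st class) plus a PRO 1000 MT port (2nd class under Win7) passes the check, while a team made of two PRO 1000 MT ports alone does not, because there is no Teaming tab to start the wizard from.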

In the golden era of XP/2003 (and the vintage parallel PCI, and proper in-house IT), NICs labeled "desktop" (or sitting as a LOM on a "desktop" motherboard) were typically eligible as 2nd-class team members, while any "Server" adapter was automatically a 1st-class teamable NIC.

Today, in Win7+, pretty much any current Intel NIC (that would be a PCI-e gigabit chip) is a 1st-class team member, older PCI/PCI-X NICs are apparently 2nd class, and older Intel PCI desktop NICs (including gigabit models) are not eligible for Intel teaming at all.

The teaming wizard may occasionally yap at you that some feature (e.g. jumbo frames) is not available on a particular team member, and hence the whole team will be deprived of that feature (but otherwise perfectly functional). If you face this and you do need jumbo frames, check the driver properties of the individual member NICs in your team: maybe they're all capable of jumbo frames, but the feature is disabled by default (per port) in the driver and just needs to be enabled (configured).

Note that some earlier Windows updates prevented teaming from working in Windows 10 (the problem has been fixed by later Windows updates).

If you manage to boot XP on a modern Intel-based PC motherboard, most likely any Intel NICs in the system will be treated as 1st-class teamable hardware under Windows XP, as long as the modern NIC is supported by the final Intel NIC driver release for XP/2003 (version 18.3), which is already a little dated at the time of this writing.

Note that Intel seems to have dropped support for older PCI/PCI-X NICs from its current driver versions (which no longer support XP/2k3). Interestingly, these NICs still typically pop up with a driver installed automagically, but this will be a stock Windows driver: an older version originally from Intel, adopted by Microsoft earlier on into the Windows installer. If you download a current driver from Intel, its .EXE installer will apply/update the current driver on all the modern NICs that it supports, but will leave any "old and no longer supported" Intel NICs running with the stock Windows driver.

Some of the Intel teaming guides also mention "multi-vendor Teaming", but no particular other vendor or NIC is ever named. At the same time, the "new team wizard" may propose NICs that are actually running with a stock driver from the Windows installer, i.e. an older Intel driver "resealed" and "provided by Microsoft" as part of the Windows installation media (or Windows Update).

Maybe "multi-vendor Teaming" is merely a euphemism for limited interoperability with older Intel NICs? ;-) Google can find a historical forum post from an Intel employee admitting that no other vendor has been "certified" yet.

The feature set of the team (e.g. jumbo support) is limited by the "lowest common denominator", i.e. if one NIC in a team does not support jumbo frames and all the others do, the team as a whole will not support jumbo frame sizes.

Also, if you want teaming to work properly, especially in a multi-vendor team, you may want to disable some upper-level TCP/UDP offload features (HW acceleration), as those typically rely on all the packets in a sequence passing through a single NIC chip. (Or the teaming stack will disable/prevent those features automatically.)
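The "lowest common denominator" rule, combined with the automatic suppression of offloads that need a single NIC, can be modeled as a set intersection. A minimal Python sketch follows; the feature names (`"jumbo"`, `"tcp_offload"`, `"vlan_tagging"`, ...) and the `SINGLE_NIC_OFFLOADS` set are illustrative assumptions, not the Intel driver's actual property names.

```python
from functools import reduce
from typing import Dict, FrozenSet, Set

# Hypothetical feature names, for illustration only: offloads assumed to rely
# on a whole packet sequence passing through a single NIC chip.
SINGLE_NIC_OFFLOADS = frozenset({"tcp_offload", "udp_offload"})


def team_features(members: Dict[str, Set[str]]) -> FrozenSet[str]:
    """Return the features the team as a whole can offer.

    The team gets the intersection ("lowest common denominator") of the
    features *enabled* on each member port, minus the offloads that the
    teaming stack is assumed to suppress.
    """
    if not members:
        return frozenset()
    common = reduce(lambda a, b: a & b, (frozenset(f) for f in members.values()))
    return common - SINGLE_NIC_OFFLOADS
```

Note that the intersection is taken over features *enabled* on each port, which is why switching jumbo frames on for the one port where the driver left them off by default can restore jumbo support for the whole team.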

The stock Intel NIC drivers, those shipped by Microsoft as part of the Windows installation media, do not come with the Intel teaming functionality. To get Teaming, you have to download the driver package (EXE installer) from Intel. Even then, the Teaming stuff is apparently not "plug and play" ready: if you add another "known teamable" NIC adapter later on, you may find that the Teaming tab has not popped up in the device properties, even though the basic driver is installed and works just fine. The recommended bullet-proof procedure for getting Teaming to work on your (supported) Intel NICs is: install all the hardware first, and only when all the PCI NIC devices are in place, install the Intel driver (run the EXE installer). If you've already installed the driver (oops! heelp!), manually uninstall the Intel software package (it's a single entry in the "Programs and Features" control panel) and run the Intel driver installer again.

Generally, if you start messing with two motherboards and several plug-in cards, you may face weird behaviors that appear to be bugs in the Intel driver. If you have 5 or more Ethernet ports in the system, or you keep liberally swapping cards and moving them around between slots, the Intel driver can easily get confused.

Just keep in mind that the same chip (PCI Vendor+Device ID), if inserted in a different PCI(-e) slot, will create another PCI device and NIC instance in the Windows registry. You can easily end up with several dozen NIC instances (active and dormant) in the registry, and the Intel driver in Windows in particular doesn't seem to be very well tested for this use case :-)
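To see why swapping cards around multiplies NIC instances, here is a deliberately simplified Python model, assuming a device instance is keyed by vendor ID, device ID and physical slot. Real Windows device instance IDs are built differently; the `PciNic` class, the `plug_in` function and the slot names are hypothetical. 0x8086 is Intel's PCI vendor ID, and 0x10D3 is used here as the i82574L device ID.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

InstanceKey = Tuple[int, int, str]


@dataclass(frozen=True)
class PciNic:
    vendor_id: int  # PCI Vendor ID (0x8086 = Intel)
    device_id: int  # PCI Device ID (identifies the chip model)
    slot: str       # physical location; hypothetical naming, e.g. "slot1"


def plug_in(registry: Dict[InstanceKey, str], nic: PciNic) -> str:
    """Return the NIC instance for this card, creating a new one if needed.

    Simplified model: the instance key includes the slot, so the very same
    card in another slot becomes a new instance, and the old one stays
    behind in the registry as a dormant entry.
    """
    key = (nic.vendor_id, nic.device_id, nic.slot)
    if key not in registry:
        registry[key] = f"NIC instance #{len(registry)}"
    return registry[key]
```

Moving one card through three slots and back leaves three instances in this model's registry, two of them dormant; only returning to a previously used slot reuses an existing instance.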

Also, keep in mind that Intel have to maintain their driver with compatibility across several Windows versions in mind, and each Windows version (or even just a service pack) may bring, and does bring, slight differences in the internal workings of the various APIs involved... so it may actually be Windows having a problem connecting all the dots.

Actually, if you don't mess with the Ethernet ports too much, this is a piece of cake compared to e.g. a printer installation in Windows :-)

The Intel Ethernet hardware is nice in that legacy is honored and there's generally a single common driver for all Intel NICs. Nevertheless, over the years there has been some unstoppable progress: the register-level interface of the NIC hardware sometimes changes in a big step (rather than by minor tweaks and slow evolution). You can't stop progress.

Intel NICs already had MSI back in the parallel PCI era, and once on PCI-e, they soon transitioned to MSI-X, where a single NIC port can directly trigger maybe 3 to 5 interrupt vectors (ISR routines), e.g. the RX and TX directions each have their own IRQ or two (per port!), and there's another "management" IRQ for good measure...

In the old days of parallel PCI, buffer sizes in the NIC chip were large (64 kB or thereabouts) and documented in the chip datasheets. Desktop and server adapters differed in how the buffer space was balanced between RX and TX. With the arrival of PCI-e, the buffer size got a little cryptic, possibly smaller, perhaps because of the sheer bandwidth available from PCI-e, combined with SG-DMA and whatnot.

In the good old days of trusty Intel Gigabit NICs that you could swear by, jumbo support was a given. All the NICs could do it, including the "low end" desktop models. Later on came a time, in the era of the i945 to i4x series chipsets, when the PCI-e (and LOM) chips typically did not support jumbo frames, but a select few higher-end chips did. All premium (= server) adapters supported jumbo, as did one tiny PCI-e chip boldly challenging its fat server brethren: the cheeky challenger was the i82574L, a single-port, low-power PCI-e x1 gigabit Ethernet chip, apparently meant for "embedded" use in industrial fanless PCs etc. It can be seen both onboard in industrial PC hardware and in the form of add-on PCI-e boards (called the Intel PRO 1000 CT desktop adapter, if memory serves).

Then the tide turned again, and nowadays jumbo support seems universal in modern Intel PCI-e NIC chips, including the i210/211/217/219, which feel like low-end onboard/LOM chips... not to mention the i350 series server chips.

Another feature that has surfaced "out of nowhere" is IEEE 1588 (client only) support in modern Intel chips, suddenly available "across the board". Not to mention the not-so-recent addition of TCP offload (and other offloads), PCI-e SR-IOV in the higher-end server chips etc. The offloads and IOV typically don't mix very well with Teaming, BTW.

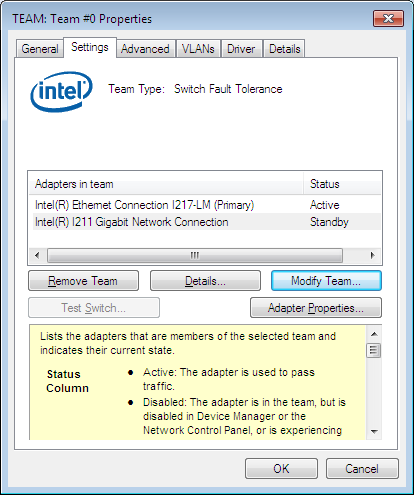

The Intel teaming framework supports about 5 different modes, some geared towards fault tolerance, others toward performance. Some only work against a single switch (e.g. the mode using the standard/open LACP protocol) while others work even with uplinks going to different switches (the mode called "Switch Fault Tolerance").

Microsoft mentions only two basic "configurations" of their teaming subsystem: "Switch-independent teaming" and "Switch-dependent teaming". The latter roughly corresponds to LACP (IEEE 802.3ad, later moved to IEEE 802.1AX).

Note that while Intel tends to mention STP in their material on Teaming, especially regarding the "Switch Fault Tolerance" mode, if you look closer, the switch ports facing your teamed NICs are supposed to have STP disabled, so the Intel teaming stack does not seem to speak STP itself. The SFT mode is not implemented as a soft-bridge speaking STP. Microsoft do not mention STP in their teaming guide, but that probably doesn't make a difference.

In other words, Intel's SFT mode actually feels rather similar to Microsoft's "switch-independent" configuration.

Both Intel and Microsoft also discuss load-balanced configurations (for increased throughput), and both take care to explain that load balancing is per flow rather than per packet, to avoid confusing TCP with reordered packet delivery and other possible pitfalls in practical network operation.
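Per-flow balancing is typically implemented by hashing header fields that stay constant for the lifetime of a flow and using the result to pick a team member. A minimal Python sketch, assuming a 5-tuple flow key and SHA-256 as the hash; neither Intel nor Microsoft necessarily uses these exact inputs or this algorithm, and `Flow` and `pick_member` are hypothetical names.

```python
import hashlib
from typing import NamedTuple, Sequence


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    proto: str     # "tcp" or "udp"
    src_port: int
    dst_port: int


def pick_member(flow: Flow, members: Sequence[str]) -> str:
    """Map a flow to one team member; every packet of the flow uses it.

    A stable hash (not Python's per-process randomized hash()) keeps the
    choice identical for every packet of the flow, across runs too.
    """
    key = "|".join(map(str, flow)).encode()
    digest = hashlib.sha256(key).digest()
    return members[int.from_bytes(digest[:4], "big") % len(members)]
```

Because every packet of a flow leaves through the same port, packets of one TCP connection can never overtake each other on different links. The trade-off is that a single flow never gets more than one member's bandwidth; the throughput gain only shows with many concurrent flows.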

Intel has always tried to draw an artificial boundary between desktop and entry-level server hardware (a marketing choice, sometimes enforced by rules implemented in software). Hence their decisions on which NICs you are allowed to use for teaming.

Microsoft makes no distinction between "server" and "desktop" NICs, and doesn't require or prefer any particular NIC chip vendor/brand (the stack is HW vendor-agnostic).

The Windows Server 2012 native teaming stack should work with pretty much any NIC that has a basic driver available, bar some of the accelerated features or jumbo frames (see above).

Talkback welcome: rysanek [at] fccps [dot] cz